Multimodal Deep Learning for Early Detection of Lung, Breast, and Skin Cancer

Keywords:

Cancer detection, Multi-modal deep learning, Explainability, Imaging biomarkers, Early diagnosis

Abstract

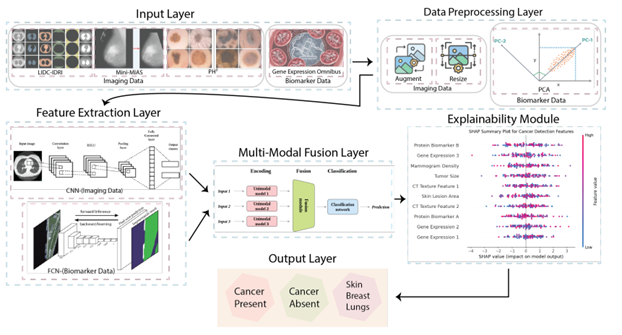

This work presents a multi-modal deep learning framework for early cancer detection that integrates imaging and biomarker data to improve diagnostic accuracy and reliability. Convolutional neural networks (CNNs) extract spatial features from imaging data, while fully connected networks (FCNs) process biomarker data. An attention-based fusion mechanism combines the features from both modalities, capturing complementary information and improving predictive accuracy. To support clinical use, explainability methods such as SHAP and Grad-CAM give transparent insight into how the model reaches its decisions. Evaluation on several datasets, namely LIDC-IDRI (lung CT), Mini-MIAS (mammography), and PH² (dermoscopy), shows that the framework is robust across cancer types and scalable. The framework achieves state-of-the-art performance across key metrics, setting a benchmark for AI-driven cancer diagnostics and paving the way for more reliable, interpretable, and scalable solutions in real-world healthcare applications.

License

Copyright (c) 2026 Inspire Health

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.